Meta's blog about ICT in education and more

Doing more cool stuff with Maple TA without being a Maple Expert

Hybrid Assessment: Combining paper-based and digital questions in MapleTA

In August 2016 we upgraded to Maple TA 2016. I had been looking forward to this moment, since the release brought some very promising new features: support for essay questions, improved manual online grading and a new question type I had requested, Scanned Documents.

Some teachers would like students to make a sketch or show their steps in a complex calculation. These actions are hard to perform with regular computer-room hardware (mouse and keyboard only), so it would be nice to keep them on paper. But how do you cleverly connect that paper to the gradebook? In my blog post from May 2013 I mentioned such a connecting feature in ExamOnline, and a similar feature has now been implemented in Maple TA.

Last Friday we had the first exam that actually used this feature. About a hundred students had to mark certain areas in an image of the brain on paper. The papers were collected, scanned and uploaded as a batch to MapleTA's gradebook. The exam reviewers found the scanned papers in the gradebook and graded the responses manually.

How does this work?

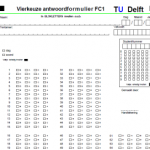

First, to use the batch upload feature, you need a paper form that can be recognized during scanning. We started from the 'Sonate' form (see image 1). Sonate is our multiple-choice test system, which we use for item and test analysis. The form carries markings that the scanning software, Teleform, can recognize.

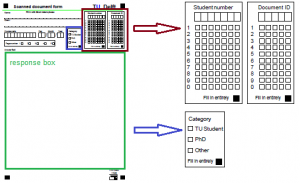

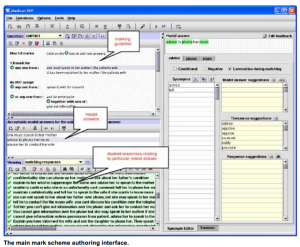

We converted this form into a 'Maple TA form' (see image 2). We added a response box (in green), entry fields for the student and the Document ID (in brown) and a user category (in blue). MapleTA creates the Document ID, a unique numerical code per student in the test.

A script in the scanning software creates a zip file containing all the scanned documents, plus a CSV file that tells MapleTA which documents are in the zip. As soon as the files are created and saved, an e-mail is automatically sent to support. The support desk uploads the files to MapleTA and notifies the reviewers that everything is set.
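The packaging step performed by the Teleform script can be sketched in Python. This is a minimal illustration, not our actual script. The CSV column names (`document_id`, `file_name`) and the `<DocumentID>.pdf` naming scheme are assumptions made for the example; the format MapleTA really expects is described in the MapleTA help.

```python
import csv
import io
import zipfile
from typing import Dict, Tuple


def build_upload(scans: Dict[str, bytes]) -> Tuple[bytes, str]:
    """Package scanned PDFs into a zip file plus a CSV manifest.

    scans maps a Document ID (as filled in on the paper form) to the PDF
    bytes of that student's scanned response. The column names and the file
    naming are hypothetical; check the MapleTA help for the expected format.
    """
    zip_buffer = io.BytesIO()
    with zipfile.ZipFile(zip_buffer, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for doc_id, pdf_bytes in scans.items():
            zf.writestr(f"{doc_id}.pdf", pdf_bytes)  # one PDF per Document ID

    csv_buffer = io.StringIO()
    writer = csv.writer(csv_buffer, lineterminator="\n")
    writer.writerow(["document_id", "file_name"])  # hypothetical header row
    for doc_id in scans:
        writer.writerow([doc_id, f"{doc_id}.pdf"])  # tells the importer what is in the zip

    return zip_buffer.getvalue(), csv_buffer.getvalue()
```

Both files are built in memory. Writing them to disk is then a matter of `Path("upload.zip").write_bytes(zip_bytes)` and `Path("upload.csv").write_text(manifest)` with `pathlib.Path`, which keeps the packaging logic separate from where the files end up.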

The gradebook shows a button that opens the PDF file, so the reviewer can grade the student's response.

The MapleTA help describes this process in detail, including how to upload the files to the gradebook manually. Maplesoft extended this feature by also allowing students to upload their own attachment, which can be any of the following file types:

This feature is very promising for our online students. At the moment, students taking online-proctored exams send their attachments by e-mail. With this feature they could upload them in MapleTA instead, as long as the file type is allowed.

MapleTA and Mobius User Summit 2016 in Vienna

Last week I attended Maplesoft's MapleTA and Mobius User Summit 2016 in Vienna: two and a half days well spent. This was already the third summit, after Amsterdam (2014) and New York (2015). It is good to meet the same people from earlier summits, but also good to see new faces as MapleTA becomes more popular in Europe.

For me the summit started with two training sessions, or should I say demo sessions. The first one was about Advanced Question Creation in MapleTA, and Jonathan zoomed in on Maple-graded questions. I recently took my first steps with Maple-graded questions (see my blog post 'Doing cool stuff with MapleTA without being a Maple expert'), so this was very welcome. He addressed some differences between coding in Maple and in MapleTA, and gave us useful tips on how to work around them (the general solution involves the 'convert' procedure).

I got quite jealous when he said that at Birmingham they have created grading scripts they can call from the grading code field, which makes creating a Maple-graded question a lot easier. Unfortunately these scripts cannot easily be transferred to other MapleTA installations. The good news is that he is talking to Maplesoft about integrating them into the software. Let's hope it gets picked up. Jonathan also referred to the MapleTA Community, where any user can ask questions or respond to other users' problems. It is active, though still in beta.

The second demo, by Aaron, was on creating lessons and slide shows in Mobius. We at Delft had already done some pilot projects at the beginning of the year, but I was still pleasantly surprised by the improvements to some features. The software has not been officially released yet, and the first version will not have all of the features Jim sketched for us during the Mobius Roadshow in September. Still, it holds a lot of promise, and at TU Delft we will continue piloting it.

The conference on Thursday and Friday featured several user presentations covering the themes of the conference:

- Shaping Curriculum

- Content Creation (with Mobius)

- User Experience Mobius

- Integrating with your Technology

- The future of Online Education

Eight different institutions presented their implementations, course examples, success stories and troubles. Most interesting, though, were the breaks between the themes. There was plenty of time to talk with the other participants, either to hear more about their presentations or just to get to know each other. The opportunity to talk to Maplesoft staff about some issues and near-future developments was also very valuable.

On Friday Steve Furino (University of Waterloo) led an interactive session on what the participants' top 3 future developments should be. This resulted in a list of about 30 items, including multiple entries on the same topic. Usability, regrading and universal coding across Maple and MapleTA made up the top 3.

Mobius in particular prompted many requests from fellow participants to work together on creating and exchanging materials. We saw some lovely examples from Waterloo: chemistry for engineers, precalculus and computer science. Feel free to click those links and check out the courses. Waterloo is thinking of setting up a workshop focused on developing online STEM courses (using Mobius). The idea is that participants will leave with a completed online STEM course that they can implement immediately. The workshop would last somewhere between 2 and 4 weeks on site (Waterloo, Canada). If you would like to take part in their viability evaluation, follow the link. It sounds like a great idea to me, but I wonder whether being away for 4 weeks would be a problem for most of the people who are interested.

I left the conference full of ideas and with a personal action list. I am already looking forward to the next User Summit (probably in London; no date set yet). I hope to have made a lot of progress on my plans by then, so that I'll have interesting experiences to share.

Attending the Educause Learning Technology Leadership Institute

This month I got the chance to attend the Educause Learning Technology Leadership Institute (LTL-2016) in Austin, Texas. None of my colleagues were familiar with this institute, so I got to be the guinea pig. It seemed interesting to join an institute where everyone works in the same line of work but comes from a different school or university (in fact, only one participant per school is allowed). Participants work in teams on a so-called 'culminating' project.

A couple of weeks before the institute, a Yammer network was set up so everybody could already introduce themselves. During the week in Texas we would share information and hold discussions through Yammer.

The major goal of the week was networking and sharing experiences from our daily practice. With all of us in the same line of work, discussions were very interesting, and it was rather easy to relate to the problems raised by both faculty and participants. The vast majority of the participants were American, coming from all kinds of schools and universities across the US. There were only three participants from Europe and the Middle East: the University of Edinburgh, Northwestern University (in Qatar) and TU Delft.

To my surprise there were almost 50 participants, divided over 7 round tables, and 7 faculty members in total to lead the institute. The faculty members were typically former participants of this institute. They could tell what participating in LTL had meant for their careers and for building a network of relations throughout the country.

Each day, when you came into the room, you had to find your name tag on one of the tables. The idea was that you would talk to as many people as possible rather than sit with the same group all the time. For the first two days this worked out fine. The introduction of a specific topic, followed by a small assignment or group discussion, let you get to know the people at your table a bit better. But you were also supposed to work on a joint project in a fixed team (the people at your table on the second day), so the idea got somewhat distorted over time. You tend to want to be with your team to discuss the impact of the topics being 'taught' by the faculty members, rather than with yet another person you first have to get to know. On top of that, most of the topics on the third and fourth days took the form of lengthy presentations, leaving hardly any time for discussion.

Working in the team on our project was great. Our team had good discussions about improving digital literacy for both students and teachers. It was quite interesting to go into depth: we had to 'create' our own school, write a plan to improve digital literacy and find the right arguments to defend that plan in front of the stakeholders (dean, provost, CIO, CFO, etc.). It gave me deeper insight into the differences and similarities between European and North American universities.

On the last day I spoke to the participant from Edinburgh, and we briefly discussed whether a European version of this institute could be a good idea for the future. From a networking perspective I believe it could be a very good idea. I would be interested in helping to set it up, so who knows...

Doing cool stuff with MapleTA without being a Maple expert

I have been using MapleTA for a couple of years now, and so far I have not used the Maple-graded question type much. I did not feel the need, and frankly I thought my knowledge of the underlying Maple engine would be insufficient to do a good job. But working closely with a teacher on digitizing his written exam taught me that you don't have to be an expert Maple user to create great (exam) questions in MapleTA.

A couple of months ago we started a small pilot. A teacher in Materials Science, an assessment expert and I (the MapleTA expert) set out to recreate a previously taken written exam in a digital format. Based on the learning goals of the course and the test blueprint, we wanted to create a genuinely digital exam that would make optimal use of being digital, not just an online version of the same questions. The questions should reflect the students' skills and not just assess them on the numerical values they enter in the response fields.

Being so closely involved in the process, and working together to identify the essential steps and skills students need to show, really challenged me. I had to dive into the system and come up with creative solutions, mostly using the adaptive question type and Maple-graded questions.

Adaptive Question Type – Scenarios

The adaptive question type allowed us to create different scenarios within a question:

- A multiple-choice question was expanded into a multiple-choice main question with some automatically graded underpinning questions. Students needed to answer all questions correctly to get the full score; missing one question resulted in zero points. Answering the main question correctly took the student to a set of underpinning questions. Failing the main question skipped the underpinning questions and took the student directly to the next question in the assignment. Students did not have to waste time on the underpinning questions, since the full score required every answer to be correct.

  This way the chance of guessing the right answer was practically eliminated, so we did not have to compensate for guessing in the overall test score. The problem itself was also posed in a more authentic way: here is the situation, what steps do you need to take to get to the right solution? Posing the main question first requires the students to think about the route, without guiding them along a certain path.

- A numerical question was also expanded into a main question with sub-questions. This time, students who answered the main question correctly were taken straight to the next question. That answer required them to find their own strategy for working towards the solution, so they had already shown they could solve the problem. Students who did not get the correct answer were given a couple of additional questions to see where they went wrong. These helped them solve the problem with a little support, while still letting them gain a partial score on the main question.

- Another adaptive question scenario required all students to go through the main question and all the sub-questions. The sub-questions either underpinned the main question or helped students towards the right solution by presenting the steps that need to be taken. Again, this allowed students to show more of their work and thinking than a plain set of questions would, without guiding them too much on how to solve the problem.
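The scoring rules of the first two scenarios can be made concrete in a small Python sketch. It models only the grading logic described in the list above, not how MapleTA implements adaptive sections. The `max_partial` weight in the second scenario is a hypothetical value, since the exact partial score is not specified here.

```python
from typing import Sequence


def gatekeeper_score(main_correct: bool, sub_correct: Sequence[bool]) -> float:
    """Scenario 1: multiple-choice main question with underpinning questions.

    Full score only when every answer is correct. A wrong main answer ends the
    question at once, so the underpinning answers are never looked at.
    """
    if not main_correct:
        return 0.0
    return 1.0 if all(sub_correct) else 0.0


def rescue_score(main_correct: bool, sub_correct: Sequence[bool],
                 max_partial: float = 0.5) -> float:
    """Scenario 2: numerical main question with help questions.

    A correct main answer earns the full score and skips the help questions.
    Otherwise the help questions earn partial credit, capped at max_partial
    (a hypothetical weight, not a value from the pilot).
    """
    if main_correct:
        return 1.0
    if not sub_correct:
        return 0.0
    return max_partial * sum(sub_correct) / len(sub_correct)
```

In the first scenario a wrong main answer scores zero whatever follows. Skipping the underpinning questions therefore costs the student nothing, which is why sending them straight on saves time without affecting the score.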

So much for the adaptive questions. What about the Maple-graded ones?

Maple graded – formula evaluation and Preview

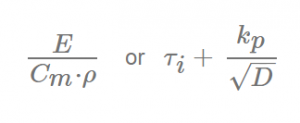

The exam required students to type in a lot of (non-mathematical) formulas. Some were used to compute material properties, others to derive a material index for selecting the material that performs best under particular circumstances.

For example:

Maple can evaluate this type of question quite well, since the order of the terms does not influence the outcome of the evaluation. We first tried the symbolic entry mode, but try-out sessions with students showed that the text entry mode was preferable, because it made entering a response faster.
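The reason term order does not matter can be illustrated outside Maple with a randomized spot check: two formulas that are algebraically equal give the same value for any choice of variable values. The Python sketch below is a stand-in for Maple's symbolic comparison, not what MapleTA does internally. It rewrites Maple-style subscripts such as `sigma[y]` into plain names and `^` into `**`. It also uses `eval` without builtins, which is acceptable for a demonstration but not safe for untrusted student input.

```python
import math
import random
import re


def to_python(expr: str) -> str:
    """Rewrite Maple-style notation into Python syntax (illustration only)."""
    expr = re.sub(r"([A-Za-z]\w*)\[(\w+)\]", r"\1_\2", expr)  # sigma[y] -> sigma_y
    return expr.replace("^", "**")                           # Maple powers -> Python powers


def equivalent(expr_a: str, expr_b: str, trials: int = 20) -> bool:
    """Randomized check: equal formulas agree at every random point tried."""
    a, b = to_python(expr_a), to_python(expr_b)
    names = set(re.findall(r"[A-Za-z_]\w*", a + " " + b))
    rng = random.Random(42)  # fixed seed for reproducible runs
    for _ in range(trials):
        # Positive values avoid division by zero and negative bases.
        env = {name: rng.uniform(0.5, 2.0) for name in names}
        # eval without builtins: fine for a demo, NOT safe for untrusted input.
        value_a = eval(a, {"__builtins__": {}}, env)
        value_b = eval(b, {"__builtins__": {}}, env)
        if not math.isclose(value_a, value_b, rel_tol=1e-9):
            return False
    return True
```

For instance, `equivalent("sigma[y]/rho/C[m]", "sigma[y]/(C[m]*rho)")` is true. Comparing the same formula with `sigma[y]/rho/c[m]` gives false, because `C[m]` and `c[m]` are different symbols to the checker.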

Then we discovered the power of the Preview button. It warned students about misspelled Greek letters (e.g. lamda instead of lambda), misplaced brackets and incorrect Maple syntax. It also allowed us to give feedback telling students that certain parameters in their response should be elaborated. This can be done by defining Custom Previewing Code, such as:

```maple
# If the response contains exactly one capital A (the bare surface-area term),
# ask the student to write it out; otherwise render the response as formatted math.
if evalb(StringTools[CountCharacterOccurrences]("$RESPONSE", "A") = 1) then
    printf("Elaborate the term A for Surface Area. ");
else
    printf(MathML[ExportPresentation]($RESPONSE));
end if;
```

This turned out to be a powerful way to reassure students that their response would not be graded as incorrect because of syntax mistakes, or because it was not elaborated to the right level.
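The kinds of checks the Preview step offered can be sketched as a plain Python function. This is a hypothetical illustration, not MapleTA's preview mechanism. The table of misspellings (`lamda`, plus an assumed `alfa`) is made up for the example. Unlike the Maple preview code, which counts every capital `A` in the response, this version looks for `A` as a standalone symbol.

```python
import re

# Hypothetical table of common misspellings of Greek letter names.
MISSPELLED_GREEK = {"lamda": "lambda", "alfa": "alpha"}


def preview(response: str) -> str:
    """Return a warning for the first problem found, or echo the response."""
    # 1. Brackets must be balanced and never close before they open.
    depth = 0
    for ch in response:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:
                return "Check your brackets: a ')' appears before its '('."
    if depth != 0:
        return "Check your brackets: not every '(' is closed."

    # 2. Misspelled Greek letter names, matched as whole words.
    for wrong, right in MISSPELLED_GREEK.items():
        if re.search(rf"\b{wrong}\b", response):
            return f"Did you mean '{right}' instead of '{wrong}'?"

    # 3. A standalone symbol A should be elaborated as a surface area.
    if re.search(r"\bA\b", response):
        return "Elaborate the term A for Surface Area."

    return response  # nothing to flag
```

Returning only the first problem keeps the feedback short. A student fixes one thing, previews again and sees the next message, if any.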

Point of attention: make sure the grading code allows for students being sloppy with upper and lower case characters. Students also tend to skip subscripts if they seem insignificant. We used the algorithm section to define the accepted notations and combined them with OR statements in the grading code:

Algorithm:

```maple
$MatIndex1=maple("sigma[y]/rho/C[m]");
$MatIndex2=maple("sigma[y]/rho/c[m]");
```

Grading Code:

```maple
# Accept the response if its difference with either accepted notation is 0.
evalb(($MatIndex1)-($RESPONSE)=0) or evalb(($MatIndex2)-($RESPONSE)=0);
```

Through the MapleTA Community I received an alternative that compensates for case sensitivity:

Algorithm:

```maple
$MatIndex1="sigma[y]/rho/C[m]";
```

Grading Code:

```maple
# Lowercase both strings, parse them into Maple expressions and compare the results.
evalb(parse(StringTools:-LowerCase("$RESPONSE"))=parse(StringTools:-LowerCase("$MatIndex1")));
```
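The two approaches, listing accepted notations and lowercasing before comparing, can be contrasted in a Python sketch. A randomized numeric check (evaluating both formulas at random variable values) stands in for Maple's parse-and-compare. The helper names are hypothetical, and `eval` is used only for brevity, so this is not safe for untrusted input.

```python
import math
import random
import re
from typing import List


def _to_python(expr: str) -> str:
    # sigma[y] -> sigma_y, and Maple's ^ -> Python's **
    return re.sub(r"([A-Za-z]\w*)\[(\w+)\]", r"\1_\2", expr).replace("^", "**")


def _same_value(a: str, b: str, trials: int = 10) -> bool:
    # Randomized numeric comparison; eval without builtins is for demo use only.
    names = set(re.findall(r"[A-Za-z_]\w*", a + " " + b))
    rng = random.Random(7)
    for _ in range(trials):
        env = {n: rng.uniform(0.5, 2.0) for n in names}
        if not math.isclose(eval(a, {"__builtins__": {}}, env),
                            eval(b, {"__builtins__": {}}, env), rel_tol=1e-9):
            return False
    return True


def grade(response: str, accepted: List[str], ignore_case: bool = False) -> bool:
    """ignore_case=False: OR over explicitly listed notations (first approach).
    ignore_case=True: lowercase both sides before comparing (second approach)."""
    def norm(s: str) -> str:
        return s.lower() if ignore_case else s

    resp = _to_python(norm(response))
    return any(_same_value(resp, _to_python(norm(key))) for key in accepted)
```

Lowercasing has a side effect worth checking per question. Symbols that differ only in case become the same symbol, so `e/rho` is accepted where `E/rho` (for instance Young's modulus over density) was meant. Listing the alternatives explicitly avoids that, at the cost of anticipating every notation beforehand.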

Automatically graded ‘key-word’ questions in an adaptive section

To use adaptive sections in a question, all questions must be graded automatically. This means you cannot pose an essay question, since it requires manual grading. For some questions we wanted the students to explain their choice by writing a motivation in text. Both the response to the main question and the motivation had to be correct to get the full score; no partial credit was allowed.

With nearly 600 students expected to take this exam, the teacher obviously did not want to grade all these motivations by hand. One option would have been the 'keyword' question type presented in the MapleTA demo class. Since it is not a standard question type, however, it could not be part of an (adaptive) Question Designer question. The Custom Preview Code mentioned earlier inspired me to use a Maple-graded question instead. In Metha Kamminga's manual I found the syntax for searching a response text for a specific string and grading the response automatically. This resulted in the following grading code:

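```maple
# Correct if the response contains "isolator" or "insulator" anywhere (case-sensitive).
evalb(StringTools[Search]("isolator", "$RESPONSE") >= 1) or
evalb(StringTools[Search]("insulator", "$RESPONSE") >= 1);
```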

Naturally, the teacher should still review the responses that did not contain these keywords. That still means grading only a portion of the 600 student responses, since the responses that were automatically graded as correct no longer need grading. This saves a considerable amount of time.
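This triage (automatic credit when a keyword is found, manual review otherwise) can be sketched in Python. It is an illustration, not MapleTA code. The Maple grading code is case-sensitive, whereas this version lowercases the text first, so 'Insulator' also counts.

```python
from typing import Dict, List, Tuple

KEYWORDS = ("isolator", "insulator")  # the key words from the grading code


def contains_keyword(text: str) -> bool:
    """Case-insensitive substring search, similar in spirit to StringTools[Search]."""
    lowered = text.lower()
    return any(word in lowered for word in KEYWORDS)


def triage(responses: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Split student IDs into an auto-credited list and a manual-review list."""
    auto_credit, manual_review = [], []
    for student, motivation in responses.items():
        if contains_keyword(motivation):
            auto_credit.append(student)
        else:
            manual_review.append(student)
    return auto_credit, manual_review
```

A keyword match is not the same as a correct motivation. A response such as 'it is not an insulator' would also be credited automatically, and in this set-up it would never reach the teacher. Spot-checking a sample of the automatically accepted responses could be a cheap safeguard.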

Preliminary conclusions of our pilot

MapleTA offers a lot of possibilities that require little or no knowledge of the Maple engine, but

- It requires careful thinking and anticipation of student behavior.

- Make sure your students practice the necessary notations, so they become confident with MapleTA's syntax, or its 'whims' as students tend to call it.

- Take your time before the exam to define the alternatives and check in detail what is accepted and what is not. This saves you a lot of time afterwards, because making corrections in the MapleTA gradebook is unfortunately cumbersome, time-consuming and not user-friendly at all.

Setting up a user group for Maple T.A.

At TU Delft we have taken the initiative to form a user group for Maple T.A.

Maple T.A. is used in many places in higher education, and with every version it gains more extensive capabilities for taking digital assessment to a higher level. We want to start a user group to make better use of these capabilities. We also want the Maple T.A. knowledge and experience gained here and there to be shared and secured rather than lost. With the survey below, we would like to gauge the interest among Maple T.A. users in taking part in a user group.

Within a few days, nearly 20 responses have already come in from various institutions, so we will definitely be starting.

Missed the survey but interested? You can still fill it in at: http://goo.gl/forms/4rYF8B41Pt

ATP Conference 2016 – two interesting applications

During my visit to the ATP conference last week, two applications caught my interest: Learnosity and Metacog.

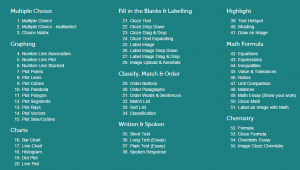

Learnosity is an assessment system that, in my humble opinion, takes Technology Enhanced Item types (TEIs) to the next level. With 55 different question templates it might be hard to choose the one that best suits the situation, but it at least extends beyond the TEIs most speakers mentioned (drag & drop, hotspot, multiple response and video).

Learnosity can handle (simple) open math and open chemistry questions that are graded automatically. This is nowhere near the extent that Maple T.A. can, but certainly usable in lots of situations. It can create automatically graded charts (bar, line and point) and graphs (linear, parabolic and trigonometric), and it has handwriting recognition. These are interesting features that should become available in many more applications.

It must be said that handwriting recognition sounds nice, but as long as we have no digital slates in our exam rooms it cannot be fully appreciated (try writing with your mouse and you'll understand what I mean). But being able to drag a digital protractor or ruler onto the screen to measure angles or distances is really cool.

For me the most interesting aspect of this assessment system was its user-friendliness. I need to dig deeper to see what we can learn from it, but it certainly is interesting.

Metacog is an interesting application because it captures the process of getting to the final answer. You can run it while the student is working on a problem. It captures the activities that you define, and afterwards you can have the system generate reports on them. Participation, behaviour, time on task and sequence of events are just a few of the reports available. This makes it a very interesting tool for gathering learning analytics data, and it can be integrated into any online learning activity. Here's a video that explains Metacog better.

[vimeo]https://vimeo.com/148498871[/vimeo]
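Time on task and sequence of events, two of the report types mentioned above, can be derived from a simple event log. The Python sketch below is generic. Metacog's actual data model and API are not described here, so the `(timestamp, event)` format and the idle cutoff of 120 seconds are assumptions.

```python
from datetime import datetime
from typing import List, Tuple

Event = Tuple[str, str]  # (ISO 8601 timestamp, event name): hypothetical format


def time_on_task(events: List[Event], idle_cutoff_s: float = 120.0) -> float:
    """Sum the gaps between consecutive events, skipping gaps longer than
    idle_cutoff_s (treated as the student being idle). Events must be sorted."""
    times = [datetime.fromisoformat(ts) for ts, _ in events]
    total = 0.0
    for earlier, later in zip(times, times[1:]):
        gap = (later - earlier).total_seconds()
        if gap <= idle_cutoff_s:
            total += gap
    return total


def sequence_of_events(events: List[Event]) -> List[str]:
    """The order in which the student performed the captured activities."""
    return [name for _, name in events]
```

The idle cutoff is a design choice that strongly affects the result: a long pause may be thinking time or a coffee break, and the log alone cannot tell them apart.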

ATP Conference 2016 – A whole different ballgame, so what to learn from it?

This past week my test-expert colleague and I went to the ATP conference 2016 in Florida. ATP stands for the Association of Test Publishers. In the US, national tests are everywhere. For each type of education (K-12, middle school, high school, college and especially professional education), tests are developed to be used nationwide. The conference crowd consisted largely of companies that develop and deploy tests for school districts or for the professional field (health care, legal, navy, military, etc.). I soon found out that these people play in the major league of test development, usability studies and item analysis. We at our university should consider ourselves lucky to be in the little league.

Fair is fair: the number of students taking exams at the university will never come near the number of test takers they develop for. In our situation, reusing the items we develop is a requirement only in some cases, and in many cases reuse is not even desirable. Their means (manpower, time and money) are also impossible for us to match. So what can we learn from the professionals?

We were especially interested in the sessions on Technology Enhanced Item types (TEIs), since we are researching test form scenarios that include TEIs and constructed-response items. For me, the main learning points lie in the usability studies these companies do.

Apparently, most of the tests created by these test publishers consist of multiple-choice questions (the so-called bubble sheet tests). Test developers overseas are starting to look towards TEIs for more authentic testing, so TEIs were a hot topic. In my first session I was somewhat surprised that all question types other than multiple choice were considered TEIs. Drag & drop items in particular were quite popular. I did not think this kind of question would cause many problems for test takers, but apparently I was wrong.

The usability of these drag & drop items turned out to be much more complicated than I thought. Is it clear where students need to drop their response? Does placing the response near the target make the student fail or not? What if the response is partly inside and partly outside the designated area, which is invisible to the student? How accurate do they need to be? When do you use a hotspot, and when do you drag an item onto an image? Do our students experience the same kind of uncertainty as the American test takers, or are they more savvy in this matter? We do see students make mistakes in handling our 'adaptive question' type. Since we are introducing different scenarios, I am even more aware than before that we should tell our students more clearly what to expect. We now have a better idea of what to look for in our usability study on 'adaptive questions', and we should not assume that taking a test with TEIs is as easy as it seems.
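The 'partly inside, partly outside' question comes down to a tolerance rule that someone has to choose. One possible rule, sketched in Python with hypothetical names, accepts a drop when a given fraction of the dragged item's area lies inside the (invisible) target zone. The threshold of 0.5 is an arbitrary assumption, and choosing it is exactly the kind of decision a usability study should inform.

```python
from dataclasses import dataclass


@dataclass
class Rect:
    x: float  # left edge (screen coordinates)
    y: float  # top edge
    w: float  # width
    h: float  # height


def overlap_fraction(item: Rect, zone: Rect) -> float:
    """Fraction of the dropped item's area that lies inside the target zone."""
    dx = min(item.x + item.w, zone.x + zone.w) - max(item.x, zone.x)
    dy = min(item.y + item.h, zone.y + zone.h) - max(item.y, zone.y)
    if dx <= 0 or dy <= 0:
        return 0.0  # no overlap at all
    return (dx * dy) / (item.w * item.h)


def is_accepted(item: Rect, zone: Rect, threshold: float = 0.5) -> bool:
    """Hypothetical tolerance rule: accept if at least `threshold` of the item is inside."""
    return overlap_fraction(item, zone) >= threshold
```

With a 100 × 50 zone at the origin, a 20 × 20 item dropped at x = 90 is half inside and is accepted. The same item at x = 95 is only a quarter inside and is rejected, although to a student the two drops may look nearly identical.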

This conference made me aware that we need to teach our instructors and support staff about the principles of usability, and to apply these principles more strictly. This fits perfectly with our research project deliverables and the online instruction modules we are about to set up.

Overview of online proctoring solutions

Eduventures published an update on remote exam proctoring, providing an overview of US-based online proctoring providers. Roughly, the systems can be divided into three categories:

- Fully Automated Solutions: The computer monitors students and determines whether they are cheating.

- Record and Review: Sessions are recorded as the computer monitors students. A human can then review the video at any time afterward.

- Fully Live: Students are on video and watched remotely by a live proctor.

There seems to be a move towards fully automated solutions. They are more cost-efficient, and technical solutions such as multi-factor authentication, eye tracking and automated cheat detection are becoming more and more available. Some systems also integrate into the institution's test delivery system or LMS.

The systems that still rely on human proctoring (either a live proctor or a later review) also use advanced technologies to help the proctor detect cheating.

Eduventures promises to keep covering these systems and providers, so in the coming months I expect more in-depth information on developments in this sector. Interesting!

Digital exams – essays and short answer questions

Now that TU Delft has invested in large computer rooms for digital exams, it is essential to make optimal use of them. Still, most exams are paper-based, and not all exams can or should be converted into automatically marked exams. My previous blog post described the positive response of students to computer exams. Given that response, I decided to search for tools for exams consisting of essay and short-answer questions, combined with functionality for easy onscreen grading.

I was inspired by Roel Verkooijen's presentation about Checkmate at a meeting of the Special Interest Group Digital Assessment. With this system the exam itself is still taken on (special) paper, but the exams are reviewed onscreen. The paper exams are scanned. The reviewing screen shows the question, the model answer and the scanned student response, and the reviewer can add comments. The system keeps track of the reviewing progress and supports working with multiple reviewers. Students can review the grading online and even discuss the comments within the same system. In my view, the major disadvantage of Checkmate is that it still relies on students' handwriting.

Checkmate is tailor-made for Erasmus MC, so unfortunately it is not available to other institutions.

At the moment I'm experimenting with CODAS. This text grading software ranks documents by their similarity to certain selected documents: to what degree do they resemble the good examples or the poor examples? With CODAS an instructor only needs to grade about 30 to 50 assignments to get a reliable ranking of the documents. CODAS can also perform a plagiarism check. My experiment aims to find out which kinds of exam questions, or rather which kinds of short answers, can be assessed reliably with this system. CODAS requires digital input (*.txt format), but the system does not provide that input itself.

My search for assessment systems that specialize in open and short-answer questions led me to a JISC study on short-answer marking engines, which discusses the use of marking engines for short questions. I would like to discuss two of these engines:

- C-rater by Educational Testing Service (ETS) uses natural language processing (NLP) to assess a student's answer. It can be used to grade open questions that require a convergent answer. It cannot handle questions that ask for a student's opinion or for examples from the student's own experience. C-rater corrects for misspellings, use of synonyms, paraphrasing and context, yet it does not reach the same level of reliability as human marking. The exam designer and the answer model builder need to know C-rater's mechanisms quite well to get a good result. Another drawback is that the system needs up to 100 scored responses to build the answer model. The system is still being improved.

- ExamOnline by Intelligent Assessment Technologies (IAT) assesses a student's response using marking schemes (model answers) added by human markers. The system handles exam delivery and supports onscreen grading, combining computer and human marking. Another interesting feature is the possibility to add hand-drawn sketches to a digital exam. When a sketch answer is required, the system shows the student a unique code to fill in on the paper sketch. Afterwards, when the sketches are scanned, the system automatically connects each scan to the student's exam, so the grader can assess the sketch onscreen.
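The sketch-linking mechanism comes down to issuing one unique code per student and joining the scans back on that code. A minimal Python version with hypothetical names follows. It is not ExamOnline's implementation. Codes that cannot be matched (for example because of misread digits) are set aside for manual handling.

```python
import secrets
from typing import Dict, Iterable, List, Tuple


def issue_codes(students: Iterable[str], digits: int = 6) -> Dict[str, str]:
    """Map a fresh random numeric code to each student (code -> student).
    Assumes far fewer students than possible codes, otherwise the loop slows down."""
    codes: Dict[str, str] = {}
    for student in students:
        while True:
            code = "".join(secrets.choice("0123456789") for _ in range(digits))
            if code not in codes:  # keep every code unique
                break
        codes[code] = student
    return codes


def match_scans(codes: Dict[str, str],
                scanned: List[Tuple[str, str]]) -> Tuple[Dict[str, str], List[str]]:
    """Join (code read from paper, scan file) pairs back to students.
    Unknown codes (e.g. misread digits) are returned for manual handling."""
    matched: Dict[str, str] = {}
    unmatched: List[str] = []
    for code, scan_file in scanned:
        if code in codes:
            matched[codes[code]] = scan_file
        else:
            unmatched.append(scan_file)
    return matched, unmatched
```

Random codes, rather than sequential ones, make it unlikely that a single misread digit lands on another student's valid code.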

I am very curious what ExamOnline can offer, and I hope to experiment with this software to see whether it might meet our needs.

On top of that, in 2 weeks I hope to get a sneak preview of MapleTA's new features for essay questions. The current version of MapleTA (8.0) provides a reasonable editor for students answering an essay question, but the grading screen is far from practical for onscreen grading of short answers longer than 30 words.