Posted in March 2016

ATP Conference 2016 – two interesting applications

During my visit to the ATP conference last week, two applications caught my interest: Learnosity and Metacog.

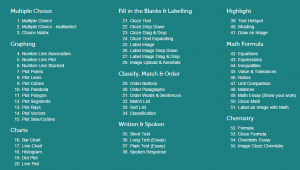

Learnosity is an assessment system that, in my humble opinion, takes Technology Enhanced Item types (TEIs) to the next level. With 55 different question templates, it might be hard to choose which one best suits the situation, but at least it extends beyond the TEIs most speakers mentioned (drag & drop, hotspot, multiple response and video).

Learnosity can handle (simple) open math and open chemistry questions that can be graded automatically. Not nearly to the extent that Maple T.A. can, but certainly usable in many situations. It can create automatically graded charts (bar, line and point) and graphs (linear, parabolic and goniometric), and it has handwriting recognition. These are interesting features that should become available in many more applications.

It must be said that handwriting recognition sounds nice, but as long as we have no digital slates in our exam rooms, it cannot be fully appreciated (try writing with your mouse and you’ll understand what I mean). But being able to drag a digital protractor or ruler onto the screen to measure angles or distances is really cool.

For me, the most interesting part of this assessment system was its user-friendliness. I need to dig deeper to see what we can learn from it, but it certainly looks promising.

Metacog is an interesting application because it captures the process of getting to the final answer. You can run it while the student is working on a problem. It captures the activities you define, and afterwards you can have the system generate reports on them. Participation, behaviour, time on task and sequence of events are just a few of the reports it can produce. This makes it a very interesting tool for gathering learning analytics data, and it can be integrated into any online learning activity. Here’s a video that explains Metacog better than I can.

[vimeo]https://vimeo.com/148498871[/vimeo]
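To make the idea of process reports a bit more concrete, here is a minimal sketch of how time on task, sequence of events and simple participation counts could be derived from a log of captured activities. This is not Metacog’s API or data format: the `Event` record, its fields and the action names are hypothetical, invented purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """One captured activity. Hypothetical format, NOT Metacog's own."""
    timestamp: float  # seconds since the student opened the task
    action: str       # hypothetical action name, e.g. "drag_item"


def time_on_task(events: list[Event]) -> float:
    """Seconds between the first and the last captured event."""
    if not events:
        return 0.0
    times = [e.timestamp for e in events]
    return max(times) - min(times)


def action_sequence(events: list[Event]) -> list[str]:
    """Actions in the order in which they happened."""
    return [e.action for e in sorted(events, key=lambda e: e.timestamp)]


def action_counts(events: list[Event]) -> dict[str, int]:
    """How often each action occurred (a crude participation measure)."""
    counts: dict[str, int] = {}
    for e in events:
        counts[e.action] = counts.get(e.action, 0) + 1
    return counts


# Hypothetical log of one student working on one problem.
log = [
    Event(0.0, "open_task"),
    Event(12.5, "drag_item"),
    Event(30.0, "drag_item"),
    Event(95.0, "submit"),
]

print(time_on_task(log))     # 95.0
print(action_sequence(log))  # ['open_task', 'drag_item', 'drag_item', 'submit']
print(action_counts(log))    # {'open_task': 1, 'drag_item': 2, 'submit': 1}
```

A real system would of course track much richer events (per step, per item, with context), but the principle is the same: the report is computed from what the student did, not just from the final answer.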

ATP Conference 2016 – A whole different ballgame, so what to learn from it?

This past week, my colleague, a testing expert, and I went to the ATP conference 2016 in Florida. ATP stands for the Association of Test Publishers. In the US, national tests are everywhere. For every type of education (K12, middle school, high school, college and especially professional education), tests are developed for nationwide use. The conference was crowded with companies that develop and deploy tests for school districts or the professional field (health care, legal, navy, military, etc.). I soon found out that these people play in the major leagues of test development, usability studies and item analysis, while we at our university would be lucky to find ourselves in little league.

Fair is fair: the number of students taking exams at our university will never come near the number of test takers they develop for. Reuse of the items we develop is a requirement only in some cases; in many of our cases, reuse is not even desirable. And their resources (manpower, time and money) are impossible for us to match. So what can we learn from the professionals?

Our special interest at this conference was the sessions on Technology Enhanced Item types (TEIs), since we are conducting research into certain test form scenarios that include TEIs and constructed-response items. For me, the main lessons lie in the usability studies these companies conduct.

Apparently, most of the tests created by these publishers consist of multiple-choice questions (the so-called bubble sheet tests), and test developers overseas are starting to look to TEIs for more authentic testing. So TEIs were a hot topic. In my first session, I was somewhat surprised that every question type other than multiple choice was considered a TEI. Drag & drop items were especially popular. I did not think these question types would cause test takers many problems, but apparently I was wrong.

The usability of these drag & drop items turned out to be much more complicated than I thought. Is it clear where students need to drop their response? Will placing the response close to, but not exactly on, the target cause the student to fail? What if the response is partly inside and partly outside the designated area (which the student cannot see)? How accurate do they need to be? When should you use a hotspot, and when should students drag an item onto an image? Do our students experience the same kind of insecurities as the American test takers, or are they more savvy in this respect?

We do see students make mistakes when handling our ‘adaptive question’ type, and since we are introducing different scenarios, I am more aware than ever that we should be clearer to our students about what to expect. We now have a better idea of what to expect when conducting our usability study on ‘adaptive questions’, and we should not assume that taking a test with TEIs is as easy as it seems.
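The question of how accurate a drop needs to be ultimately comes down to a scoring rule hidden in the item. As a minimal sketch, assuming the dropped response and the (invisible) target area are both axis-aligned rectangles, one possible rule accepts the drop when at least a chosen fraction of the response lies inside the target. The `Rect` type, the `is_accepted` function and the default threshold of 0.5 are hypothetical choices for illustration, not how any particular test publisher or vendor scores these items.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle in screen pixels (hypothetical model)."""
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float


def overlap_area(a: Rect, b: Rect) -> float:
    """Area of the intersection of two rectangles (0 if they do not touch)."""
    dx = min(a.x + a.width, b.x + b.width) - max(a.x, b.x)
    dy = min(a.y + a.height, b.y + b.height) - max(a.y, b.y)
    if dx <= 0 or dy <= 0:
        return 0.0
    return dx * dy


def is_accepted(dropped: Rect, zone: Rect, min_fraction: float = 0.5) -> bool:
    """Accept the drop if at least `min_fraction` of the dropped item lies
    inside the target zone. The 0.5 default is an arbitrary assumption."""
    item_area = dropped.width * dropped.height
    if item_area <= 0:
        return False
    return overlap_area(dropped, zone) / item_area >= min_fraction


zone = Rect(100, 100, 80, 40)     # invisible target area
inside = Rect(110, 105, 40, 20)   # fully inside the zone
mostly = Rect(150, 105, 40, 20)   # 75% inside the zone
barely = Rect(170, 105, 40, 20)   # 25% inside the zone

print(is_accepted(inside, zone))                    # True
print(is_accepted(mostly, zone))                    # True
print(is_accepted(mostly, zone, min_fraction=1.0))  # False
print(is_accepted(barely, zone))                    # False
```

The same response can be right or wrong depending on a threshold the student never sees, which is exactly why the target area and the expected accuracy need to be made clear to test takers.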

This conference made me aware that we need to make an effort to teach our instructors and support staff the principles of usability, and to be stricter about applying these principles. This fits perfectly into our research project deliverables and the online instruction modules we are about to set up.