Posted in 2013

Digital exams – essays and short answer questions

Now that TU Delft has invested in creating large computer rooms for digital exams, it is essential to make optimal use of these rooms. The majority of exams are still paper-based, and not all exams can or should be converted into automatically marked exams. Seeing the positive response of students towards computer exams that I wrote about in my previous blog post, I decided to search for tools for taking exams consisting of essay and short answer questions, combined with functionality for easy onscreen grading.

I was inspired by Roel Verkooijen’s presentation about Checkmate at a meeting of the Special Interest Group Digital Assessment. With this system, the exam itself is still taken on (special) paper, but the review of the exams is done onscreen. The paper exams are scanned. In the reviewing screen, the question, the model answer and the scanned student response are shown, and the reviewer can add comments. The system keeps track of the progress of reviewing and facilitates working with different reviewers. Students can review the grading online and even discuss the comments made within the same system. I find that the major disadvantage of Checkmate is that it still relies on students’ handwriting.

Checkmate is tailor-made for Erasmus MC, so unfortunately it is not available to other institutions.

At the moment I’m experimenting with CODAS. This is text grading software that ranks documents according to their level of similarity with certain selected documents: to what degree do they resemble the good examples or the poor examples? With CODAS an instructor only needs to grade about 30 to 50 assignments in order to get a reliable ranking of the documents. CODAS can also perform a plagiarism check. With my experiment I want to find out what kind of exam questions, or better, what kind of short answers can be reviewed reliably with this system. CODAS requires digital input (*.txt format), but the system does not provide that itself.
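To give an idea of what ranking by similarity to good and poor examples can look like, here is a minimal Python sketch. It is not how CODAS works internally, which I have not seen documented; I simply assume a bag-of-words cosine similarity, and all function names (`bag_of_words`, `rank_answers`, and so on) as well as the sample answers are made up for illustration.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lowercase the text and count how often each word occurs."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a, b):
    """Cosine similarity of two word-count vectors (0.0 if either is empty)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def mean_similarity(vector, examples):
    """Average similarity of one answer to a list of graded example answers."""
    return sum(cosine(vector, ex) for ex in examples) / len(examples)


def rank_answers(ungraded, good_examples, poor_examples):
    """Order answer ids from most like the good examples to most like the poor ones.

    Assumption: the score is simply (similarity to good) minus (similarity to poor).
    """
    good = [bag_of_words(t) for t in good_examples]
    poor = [bag_of_words(t) for t in poor_examples]
    scores = {}
    for answer_id, text in ungraded.items():
        vec = bag_of_words(text)
        scores[answer_id] = mean_similarity(vec, good) - mean_similarity(vec, poor)
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical data: one graded good answer, one graded poor answer, two ungraded answers.
good = ["Osmosis is the diffusion of water across a semipermeable membrane."]
poor = ["I do not know the answer."]
answers = {
    "s1": "Water diffuses across a semipermeable membrane.",
    "s2": "I really do not know.",
}
print(rank_answers(answers, good, poor))  # ['s1', 's2']
```

In practice the instructor would supply the 30 to 50 graded assignments as the good and poor examples, and the resulting ranking would still need a human check.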

My search for assessment systems that specialize in open and short answer questions led me to a JISC study on short answer marking engines. This study discusses the use of marking engines for short answer questions. I would like to discuss two of these engines:

- C-rater by Educational Testing Service (ETS) uses natural language processing (NLP) to assess a student’s answer. It can be used to grade open questions that require a converging answer. It cannot handle questions where a student’s opinion or examples from the student’s own experience are required. Even though C-rater corrects for misspelling, use of synonyms, paraphrasing and context, it does not reach the same level of reliability as human marking. The exam designer and the answer model builder need to be quite knowledgeable about C-rater’s mechanisms in order to get a good result. Another drawback is that the system needs up to 100 scored responses to build the answer model. The system is still being improved.

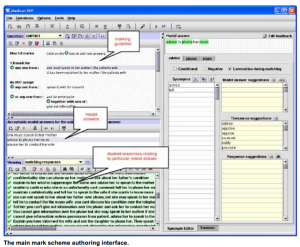

- ExamOnline by Intelligent Assessment Technologies (IAT) uses marking schemes (model answers) that are added by human markers to assess a student’s response. The system provides an exam delivery system and facilitates onscreen grading. The grading is a combination of computer and human marking. Another interesting feature this software offers is the possibility to add hand-drawn sketches to a digital exam. When a sketch answer is required, the system shows the student a unique code that the student needs to fill in on the paper sketch. Afterwards, when the sketches are scanned the system automatically connects the scan to the student’s exam so the grader can assess the sketch onscreen.

I am very curious what ExamOnline can offer, and I hope to be able to experiment with this software to see if it might meet our needs.

On top of that, in two weeks I hope to get a sneak preview of MapleTA’s new features for essay questions. The current version of MapleTA (8.0) provides a reasonable editor for students answering an essay question, but the grading screen is far from practical for onscreen grading of short answer questions exceeding 30 words.

Students positive about exam on computer

Reviewing written exams is a burden to a lot of our teaching staff. In many cases the students’ handwriting is hard to read, and corrections made by students turn reviewing into a jigsaw puzzle: piecing together the answers from partially crossed-out text, footnotes or by following arrows.

At TPM a lecturer contacted the IT department, asking about possibilities to have students take their exam on the computer in a secure environment. And so last January and April (re-exams) his students took the exam on the computer, typing their answers into a protected Word document in a secure environment: no access to the internet, other applications or network locations. The questions and the articles used in the exam were presented on screen in one and the same Word document. The answers needed to be typed into a text box beneath each question. These text boxes had a fixed size and students were not able to change the formatting in these boxes.

Afterwards, a survey was held amongst participants, asking them about their experience with this kind of exam. More than 80% of the participants responded (n=96) and overall their response was positive. In general they preferred typing over writing. Many respondents said it saved time and spared them cramped hands. About 10% of the respondents stated they preferred a written exam, mostly because they found it harder to read from a computer screen. 39% of the respondents stated that they would have preferred to get the articles they had to read on paper, so they could highlight parts of the text or add comments to help them answer the questions more easily.

When asked if they would like other courses to offer exams on the computer as well, 41% said they would like to have more exams on the computer. 52% were positive, but said it should depend on the type of exam (for instance: no sketching or elaborate calculations). Some students mentioned that typing the answers helped them to formulate their answers better, since they could rephrase and correct their answers more easily and clearly.

An important disadvantage the respondents reported was the noise of typing in the exam room. Some advised handing out earplugs; others advised installing quieter keyboards. Students would also have liked to have a spelling checker available.

Overall, students appreciated this way of examination and think it suits modern times.